VISNOTATE: An Open-source Tool for Gaze-based Annotation of WSI Data

Introduction

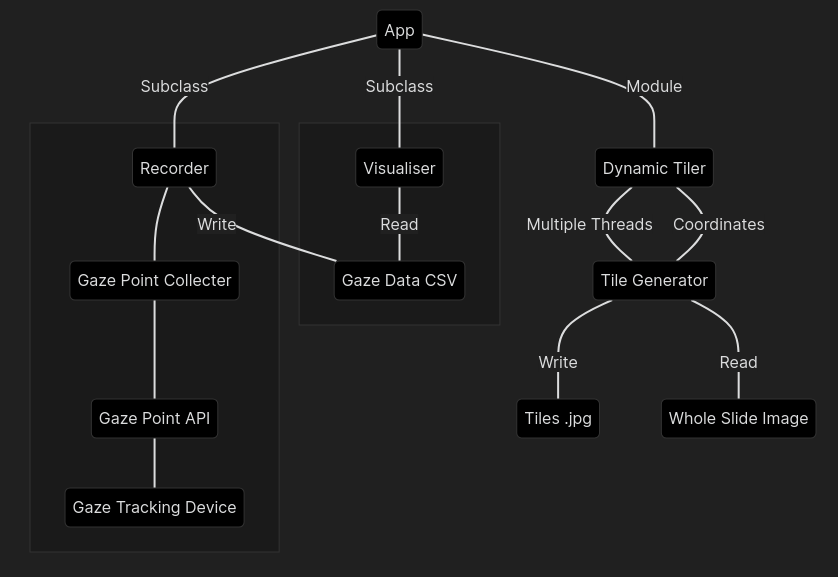

This repo contains the source code for Visnotate, a tool for tracking gaze patterns on Whole Slide Images (WSIs) in the svs format. Visnotate was used to evaluate the efficacy of gaze-based labeling of histopathology data. Details of our research on gaze-based annotation can be found in the following paper:

- Komal Mariam, Osama Mohammed Afzal, Wajahat Hussain, Muhammad Umar Javed, Amber Kiyani, Nasir Rajpoot, Syed Ali Khurram and Hassan Aqeel Khan, "On Smart Gaze based Annotation of Histopathology Images for Training of Deep Convolutional Neural Networks", submitted to IEEE Journal of Biomedical and Health Informatics.

Requirements

- Openslide

- Python 3.7

Installation and Setup

- Install openslide. The process differs depending on the operating system.

Windows

- Download 64-bit Windows Binaries from the openslide download page. Direct link to download the latest version at the time of writing.

- Extract the zip archive.

- Copy all .dll files from bin to C:/Windows/System32.

Debian/Ubuntu

# apt-get install openslide-tools

Arch Linux

$ git clone https://aur.archlinux.org/openslide.git
$ cd openslide
$ makepkg -si

macOS

$ brew install openslide

- For some operating systems, tkinter needs to be installed as well.

Debian/Ubuntu

# apt-get install python3-tk

Arch Linux

# pacman -S tk

- (Optional) If recording gaze points using a tracker, install the necessary software from its website.

- Clone this repository.

git clone https://github.com/UmarJ/lsiv-python3.git visnotate
cd visnotate

- Create and activate a new Python virtual environment if needed. Then install the required Python modules.

python -m pip install -r requirements.txt

- (Optional) Start the gaze tracking software in the background if tracking gaze points.

- Run interface_recorder.py.

python interface_recorder.py
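
If the interface fails to start, it can help to confirm that the dependencies from the setup steps are visible to the interpreter. The following is a minimal sketch, not part of this repo: `check_environment` is a hypothetical helper, and it assumes the openslide Python bindings are importable under the module name `openslide`.

```python
import importlib.util
import sys


def check_environment(modules=("openslide", "tkinter")):
    """Return a dict mapping each module name to True if it can be located."""
    status = {}
    for name in modules:
        # find_spec locates the module without actually importing it
        status[name] = importlib.util.find_spec(name) is not None
    return status


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, found in check_environment().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

This only checks that the Python modules can be located. It does not verify that the native openslide library itself loads, so a missing .dll or shared library may still surface when the tool is run.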

Supported Hardware and Software

At this time Visnotate supports the GazePoint GP3 tracking hardware. WSIs are read using the openslide software, and only the .svs file format is supported. We plan to add support for other gaze tracking hardware and image formats later.
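
To illustrate the format restriction, the sketch below checks a file's extension and then opens it with the openslide Python bindings to report its basic geometry. It is an assumption-laden example, not the code Visnotate uses: `is_supported_slide`, `describe_slide` and `SUPPORTED_EXTENSIONS` are hypothetical names, and the bindings are assumed to be installed as the `openslide` module.

```python
from pathlib import Path

# Assumption for illustration: the only format the README lists as supported
SUPPORTED_EXTENSIONS = {".svs"}


def is_supported_slide(path):
    """Return True if the file extension is a documented supported format."""
    return Path(path).suffix.lower() in SUPPORTED_EXTENSIONS


def describe_slide(path):
    """Open a slide with openslide and return its basic geometry."""
    if not is_supported_slide(path):
        raise ValueError(f"unsupported slide format: {path}")
    # Imported lazily so the extension check works without openslide installed
    import openslide

    slide = openslide.OpenSlide(str(path))
    try:
        return {
            "dimensions": slide.dimensions,          # (width, height) at level 0
            "level_count": slide.level_count,        # number of pyramid levels
            "downsamples": slide.level_downsamples,  # downsample factor per level
        }
    finally:
        slide.close()
```

Calling `describe_slide("example.svs")` on a real slide would return the level-0 size and pyramid layout. The file name here is a placeholder.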

Screenshots

The Visnotate Interface

Collected Gazepoints

Generated Heatmap

Reference

This repo was used to generate the results for the following paper on gaze-based labeling of pathology data.

- Komal Mariam, Osama Mohammed Afzal, Wajahat Hussain, Muhammad Umar Javed, Amber Kiyani, Nasir Rajpoot, Syed Ali Khurram and Hassan Aqeel Khan, "On Smart Gaze based Annotation of Histopathology Images for Training of Deep Convolutional Neural Networks", submitted to IEEE Journal of Biomedical and Health Informatics.

BibTeX Reference: Available after acceptance.