DropAttack: A Masked Weight Adversarial Training Method to Improve Generalization of Neural Networks

Abstract: Adversarial training has been shown to be a powerful regularization method for improving the generalization of models. In this work, a novel masked weight adversarial training method, DropAttack, is proposed for improving the generalization of neural network models. It enhances the coverage and diversity of adversarial attacks in two ways. First, it intentionally adds worst-case adversarial perturbations to both the input and hidden layers. Second, it randomly masks the attack perturbations on a certain proportion of the weight parameters. It then improves the generalization of neural networks by minimizing the internal adversarial risk generated by an exponential number of different attack combinations. Further, the method is a general technique that can be applied to a wide variety of neural networks with different architectures. To validate the effectiveness of the proposed method, five public datasets from the fields of natural language processing (NLP) and computer vision (CV) were used for experimental evaluation. This study compared DropAttack with other adversarial training and regularization methods and found that the proposed method achieves state-of-the-art performance on all datasets. In addition, the experimental results show that DropAttack can achieve similar performance when using only half of the training data required in standard training. Theoretical analysis revealed that DropAttack can perform gradient regularization at random on some of the input and weight parameters of the model. Further, the visualization experiments show that DropAttack can push the minimum risk of the neural network model to lower and flatter regions of the loss landscape.
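The abstract describes the core mechanism only in words. Below is a minimal, hedged sketch of one DropAttack-style update. It is written in plain Python for a single logistic-regression layer and is not the authors' PyTorch code. Several assumptions are not stated in the text above:

- Perturbations are FGM-style, meaning the gradient rescaled to L2 norm `eps`.
- The random mask zeroes each coordinate independently with probability `drop_prob`.
- The clean, input-attacked and weight-attacked losses are simply summed before the update.
- The single weight vector stands in for hidden-layer parameters.

All function names and default values are hypothetical: `loss_and_grads`, `masked_perturbation`, `dropattack_step`, `eps=0.5`, `drop_prob=0.5`.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss_and_grads(w, b, x, y):
    """Binary cross-entropy of a logistic model, plus its gradients w.r.t. w, b and x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
    loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    dz = p - y  # dLoss/dlogit for sigmoid + BCE
    return loss, [dz * xi for xi in x], dz, [dz * wi for wi in w]


def masked_perturbation(grad, eps, drop_prob, rng):
    """Worst-case (FGM-style) step of L2 norm eps, with each coordinate
    independently zeroed with probability drop_prob (assumed Bernoulli mask)."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return [0.0] * len(grad)
    return [eps * g / norm if rng.random() >= drop_prob else 0.0 for g in grad]


def dropattack_step(w, b, x, y, lr=0.1, eps=0.5, drop_prob=0.5, rng=random):
    """One hypothetical DropAttack-style SGD step on a single example."""
    # 1) Clean loss and gradients.
    loss_clean, gw, gb, gx = loss_and_grads(w, b, x, y)

    # 2) Masked adversarial perturbation of the input.
    rx = masked_perturbation(gx, eps, drop_prob, rng)
    x_adv = [xi + ri for xi, ri in zip(x, rx)]
    loss_x, gw_x, gb_x, _ = loss_and_grads(w, b, x_adv, y)

    # 3) Masked adversarial perturbation of the weights (standing in for
    #    hidden-layer parameters); gradients are taken at the perturbed point.
    rw = masked_perturbation(gw, eps, drop_prob, rng)
    w_adv = [wi + ri for wi, ri in zip(w, rw)]
    loss_w, gw_w, gb_w, _ = loss_and_grads(w_adv, b, x, y)

    # 4) Sum the three gradients and update the unperturbed parameters.
    new_w = [wi - lr * (g0 + g1 + g2) for wi, g0, g1, g2 in zip(w, gw, gw_x, gw_w)]
    new_b = b - lr * (gb + gb_x + gb_w)
    return new_w, new_b, loss_clean + loss_x + loss_w


if __name__ == "__main__":
    rng = random.Random(0)
    data = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([-2.0, -1.0], 0), ([-1.0, -2.0], 0)]
    w, b = [0.0, 0.0], 0.0
    for epoch in range(50):
        for x, y in data:
            w, b, _ = dropattack_step(w, b, x, y, rng=rng)
    print("weights:", w, "bias:", b)
```

Each step draws fresh masks, so training sees many different subsets of attacked coordinates. This is one way to read the "exponential number of attack combinations" above.

A first-order Taylor expansion connects the sketch to the "gradient regularization at random" claim. With r = eps * (M ⊙ g) / ||g|| and a binary mask M, L(x + r) ≈ L(x) + eps * ||M ⊙ g||² / ||g||. Minimizing the attacked loss therefore behaves like adding a randomly masked gradient-norm penalty. This expansion is our illustration, not a reproduction of the paper's analysis.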

-

For technical details and additional experimental results, please refer to our paper (arXiv preprint arXiv:2108.12805; see the citation below).

-

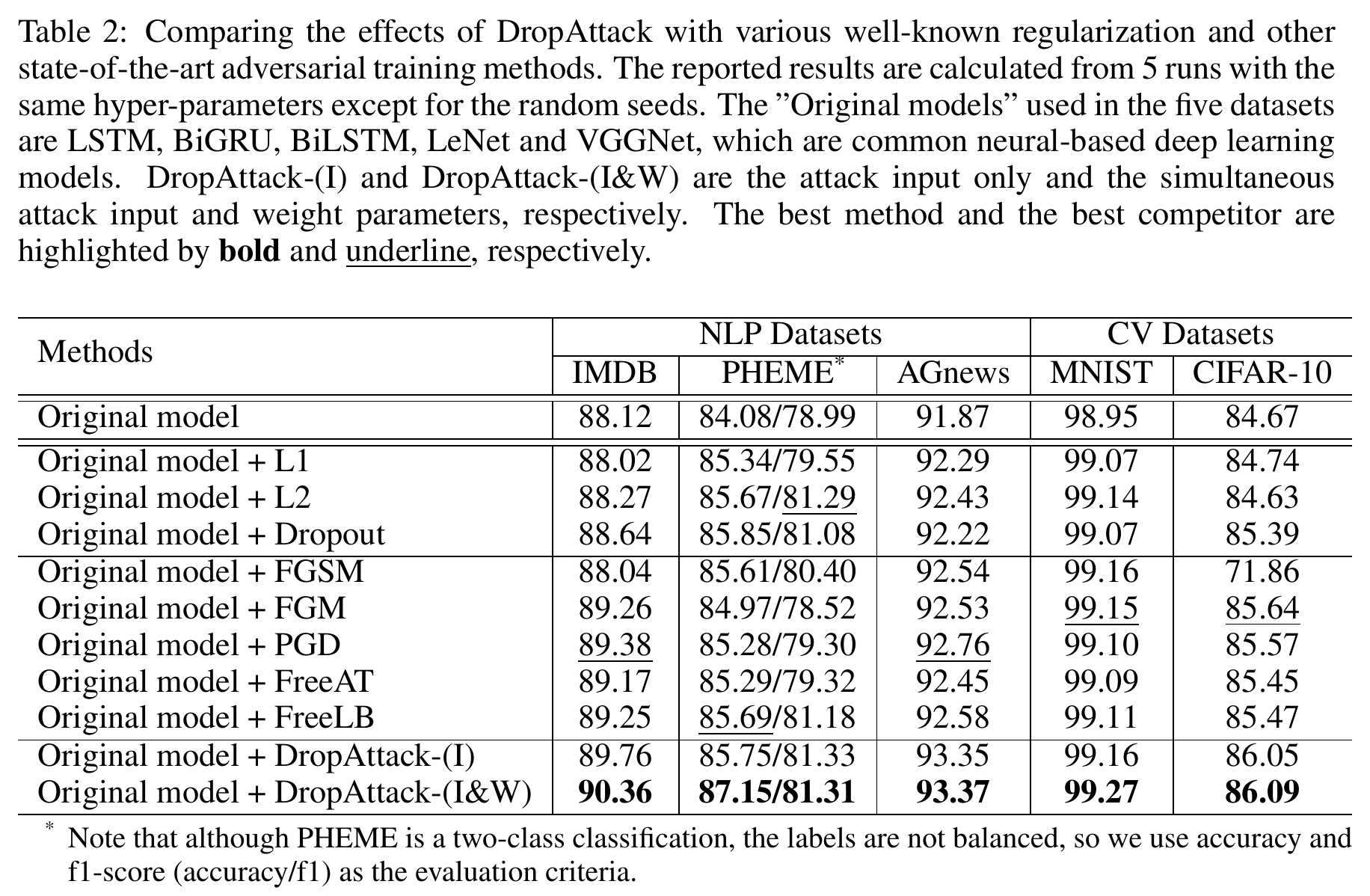

Experimental results:

DropAttack indeed favors flatter regions of the loss landscape by means of masked adversarial perturbations.

[Code for the loss visualization]
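The authors' visualization code is referenced above. As a hedged illustration of what a 1-D loss-landscape slice involves, the sketch below perturbs a parameter vector along one random direction and evaluates the loss at several step sizes. The direction is rescaled to the parameters' norm. The sketch then reports a crude sharpness number; a flatter minimum gives a smaller rise. We have not checked that the referenced code works this way. The toy quadratic losses and the names `loss_slice` and `sharpness` are hypothetical stand-ins for a trained network and its loss.

```python
import math
import random


def loss_slice(loss_fn, params, alphas, rng):
    """Evaluate loss_fn at params + alpha * d for each alpha, along one random direction d.

    d ~ N(0, I) is rescaled to the norm of params (filter normalization with a
    single "filter"), so alpha is a step size relative to ||params||.
    """
    d = [rng.gauss(0.0, 1.0) for _ in params]
    scale = math.sqrt(sum(p * p for p in params)) / math.sqrt(sum(v * v for v in d))
    d = [v * scale for v in d]
    return [loss_fn([p + a * v for p, v in zip(params, d)]) for a in alphas]


def sharpness(losses, center_index):
    """Crude flatness proxy: largest rise of the slice above the loss at alpha = 0."""
    return max(losses) - losses[center_index]


if __name__ == "__main__":
    alphas = [i / 10 for i in range(-10, 11)]  # alphas[10] == 0.0
    # Two toy losses with the same minimizer but different curvature.
    flat = lambda p: 0.1 * sum((x - 1.0) ** 2 for x in p)
    sharp = lambda p: 10.0 * sum((x - 1.0) ** 2 for x in p)
    for name, fn in [("flat", flat), ("sharp", sharp)]:
        losses = loss_slice(fn, [1.0, 1.0], alphas, random.Random(0))
        print(name, "sharpness:", sharpness(losses, 10))
```

For a real network, the usual approach is as follows:

- Apply the rescaling per filter or per layer rather than to the whole parameter vector.
- Evaluate the training loss over a dataset at each alpha.
- Plot loss against alpha for models trained with and without DropAttack.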

-

Citation

    @article{ni2021dropattack,
      title={DropAttack: A Masked Weight Adversarial Training Method to Improve Generalization of Neural Networks},
      author={Ni, Shiwen and Li, Jiawen and Kao, Hung-Yu},
      journal={arXiv preprint arXiv:2108.12805},
      year={2021}
    }

-

Requirements

- pytorch
- pandas
- numpy
- nltk
- scikit-learn (sklearn)
- torchtext

-

Please star it, thank you! :)