RITA DSL

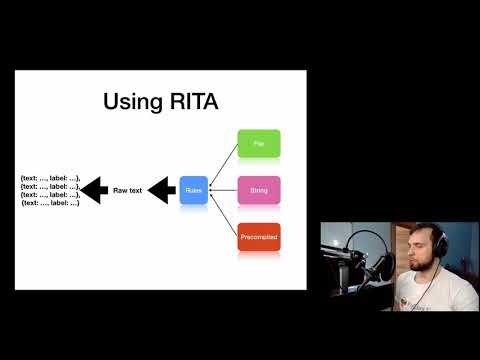

This is a language, loosely based on language Apache UIMA RUTA, focused on writing manual language rules, which compiles into either spaCy compatible patterns, or pure regex. These patterns can be used for doing manual NER as well as used in other processes, like retokenizing and pure matching

An Introduction Video

Links

- Website

- Live Demo

- Simple Chat bot example

- Documentation

- QuickStart

- Language Syntax Plugin for IntelijJ based IDEs

Support

Install

pip install rita-dsl

Simple Rules example

rules = """

cuts = {"fitted", "wide-cut"}

lengths = {"short", "long", "calf-length", "knee-length"}

fabric_types = {"soft", "airy", "crinkled"}

fabrics = {"velour", "chiffon", "knit", "woven", "stretch"}

{IN_LIST(cuts)?, IN_LIST(lengths), WORD("dress")}->MARK("DRESS_TYPE")

{IN_LIST(lengths), IN_LIST(cuts), WORD("dress")}->MARK("DRESS_TYPE")

{IN_LIST(fabric_types)?, IN_LIST(fabrics)}->MARK("DRESS_FABRIC")

"""

Loading in spaCy

import spacy

from rita.shortcuts import setup_spacy

nlp = spacy.load("en")

setup_spacy(nlp, rules_string=rules)

And using it:

>>> r = nlp("She was wearing a short wide-cut dress")

>>> [{"label": e.label_, "text": e.text} for e in r.ents]

[{'label': 'DRESS_TYPE', 'text': 'short wide-cut dress'}]

Loading using Regex (standalone)

import rita

patterns = rita.compile_string(rules, use_engine="standalone")

And using it:

>>> list(patterns.execute("She was wearing a short wide-cut dress"))

[{'end': 38, 'label': 'DRESS_TYPE', 'start': 18, 'text': 'short wide-cut dress'}]