Python library for the analysis of dynamic measurements

The goal of this library is to provide a starting point for users in metrology and related areas who deal with time-dependent i.e., dynamic, measurements. The initial version of this software was developed as part of a joint research project of the national metrology institutes from Germany and the UK, i.e. Physikalisch-Technische Bundesanstalt and the National Physical Laboratory.

Further development and explicit use of PyDynamic is part of the European research project EMPIR 17IND12 Met4FoF and the German research project FAMOUS.

Table of content

- Quickstart

- Features

- Module diagram

- Documentation

- Installation

- Contributing

- Examples

- Roadmap

- Citation

- Acknowledgement

- Disclaimer

- License

Quickstart

To dive right into it, install PyDynamic and execute one of the examples:

(my_PyDynamice_venv) $ pip install PyDynamic

Collecting PyDynamic

[...]

Successfully installed PyDynamic-[...]

(my_PyDynamice_venv) $ python

Python 3.9.7 (default, Aug 31 2021, 13:28:12)

[GCC 11.1.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from PyDynamic.examples.uncertainty_for_dft.deconv_DFT import DftDeconvolutionExample

>>> DftDeconvolutionExample()

Propagating uncertainty associated with measurement through DFT

Propagating uncertainty associated with calibration data to real and imag part

Propagating uncertainty through the inverse system

Propagating uncertainty through the low-pass filter

Propagating uncertainty associated with the estimate back to time domain

You will see a couple of plots opening up to observe the results. For further information just read on and visit our tutorial section.

Features

PyDynamic offers propagation of uncertainties for

- application of the discrete Fourier transform and its inverse

- filtering with an FIR or IIR filter with uncertain coefficients

- design of a FIR filter as the inverse of a frequency response with uncertain coefficients

- design on an IIR filter as the inverse of a frequency response with uncertain coefficients

- deconvolution in the frequency domain by division

- multiplication in the frequency domain

- transformation from amplitude and phase to a representation by real and imaginary parts

- 1-dimensional interpolation

For the validation of the propagation of uncertainties, the Monte-Carlo method can be applied using a memory-efficient implementation of Monte-Carlo for digital filtering.

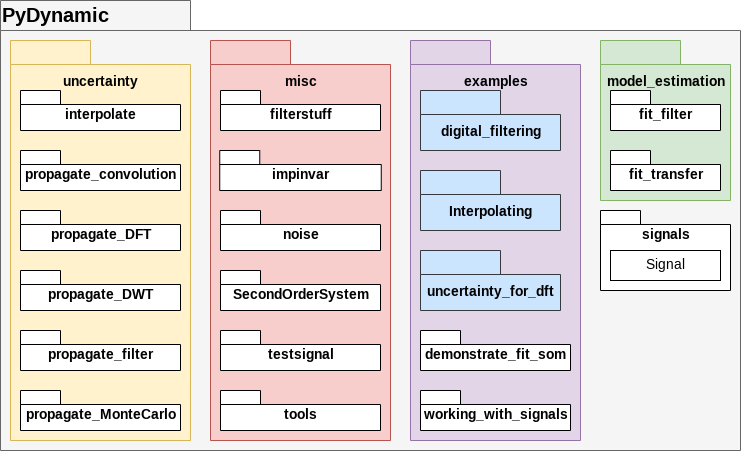

Module diagram

The fundamental structure of PyDynamic is shown in the following figure.

However, imports should generally be possible without explicitly naming all packages and modules in the path, so that for example the following import statements are all equivalent.

from PyDynamic.uncertainty.propagate_filter import FIRuncFilter

from PyDynamic.uncertainty import FIRuncFilter

from PyDynamic import FIRuncFilter

Documentation

The documentation for PyDynamic can be found on ReadTheDocs

Installation

The installation of PyDynamic is as straightforward as the Python ecosystem suggests. Detailed instructions on different options to install PyDynamic you can find in the installation section of the docs.

Contributing

Whenever you are involved with PyDynamic, please respect our Code of Conduct . If you want to contribute back to the project, after reading our Code of Conduct, take a look at our open developments in the project board , pull requests and search the issues . If you find something similar to your ideas or troubles, let us know by leaving a comment or remark. If you have something new to tell us, feel free to open a feature request or bug report in the issues. If you want to contribute code or improve our documentation, please check our contribution advices and tips.

If you have downloaded this software, we would be very thankful for letting us know. You may, for instance, drop an email to one of the authors (e.g. Sascha Eichstädt, Björn Ludwig or Maximilian Gruber )

Examples

We have collected extended material for an easier introduction to PyDynamic in the package examples. Detailed assistance on getting started you can find in the corresponding sections of the docs:

In various Jupyter Notebooks and scripts we demonstrate the use of the provided methods to aid the first steps in PyDynamic. New features are introduced with an example from the beginning if feasible. We are currently moving this supporting collection to an external repository on GitHub. They will be available at github.com/PTB-M4D/PyDynamic_tutorials in the near future.

Roadmap

- Implementation of robust measurement (sensor) models

- Extension to more complex noise and uncertainty models

- Introducing uncertainty propagation for Kalman filters

For a comprehensive overview of current development activities and upcoming tasks, take a look at the project board, issues and pull requests.

Citation

If you publish results obtained with the help of PyDynamic, please use the above linked Zenodo DOI for the code itself or cite

Sascha Eichstädt, Clemens Elster, Ian M. Smith, and Trevor J. Esward Evaluation of dynamic measurement uncertainty – an open-source software package to bridge theory and practice J. Sens. Sens. Syst., 6, 97-105, 2017, DOI: 10.5194/jsss-6-97-2017

Acknowledgement

Part of this work is developed as part of the Joint Research Project 17IND12 Met4FoF of the European Metrology Programme for Innovation and Research (EMPIR).

This work was part of the Joint Support for Impact project 14SIP08 of the European Metrology Programme for Innovation and Research (EMPIR). The EMPIR is jointly funded by the EMPIR participating countries within EURAMET and the European Union.

Disclaimer

This software is developed at Physikalisch-Technische Bundesanstalt (PTB). The software is made available "as is" free of cost. PTB assumes no responsibility whatsoever for its use by other parties, and makes no guarantees, expressed or implied, about its quality, reliability, safety, suitability or any other characteristic. In no event will PTB be liable for any direct, indirect or consequential damage arising in connection with the use of this software.

License

PyDynamic is distributed under the LGPLv3 license except for the module impinvar.py in the package misc , which is distributed under the GPLv3 license .